The meteoric rise of artificial intelligence, or AI (not to be confused with the steak sauce … just clarifying, in the event that Linda McMahon is reading) over the past few years has been fascinating and, to be honest, a little unsettling. I first noticed its earliest iterations when it showed up covertly in everyday conveniences, like my machine-learning-powered robot vacuum mapping my home and smart thermostats studying my habits and temperature preferences. Seemingly overnight, it found its way into our search engines, summarizing, digesting, and then regurgitating information quickly and efficiently, so our primitive human brains need not hunt for individual links and websites and process said information ourselves. Then, shortly after the dust of the COVID-19 pandemic settled, NVIDIA stock rocketed moonward, and suddenly every headline, tech podcast, and Reddit thread was buzzing about AI reshaping the world as we know it.

Lately, I’ve been bombarded with ads for lifelike, AI-powered humanoid robots capable of performing household tasks and even providing companionship. The almighty algorithm must know that I work in healthcare and, while I would love to see technology bring about advances in patient care, that doesn’t mean it wouldn’t be a little jarring to watch a cloth-covered, bipedal robo-butler awkwardly fumbling for Strawberry Ensure and a pudding cup while my patient sits idly on the couch in their assisted living apartment. As a longtime fan of the U.K. television show Black Mirror, I can’t help but wonder: is this where it all begins (or ends)?

With AI’s presence growing everywhere, people are starting to seek comfort in the few places that still feel human – and, for many of us, music has always been one of those strongholds. FutureAudiophile.com has long embraced technological progress while staying true to what makes this hobby special. We generally believe innovation can and will enhance the audiophile experience, not replace it. And, unlike a growing number of review sites out there, our staff consists of 100 percent real people, with real jobs, writing real reviews and stories based on genuine listening experiences. No review bots here, just unfiltered audiophile content straight from the subjective human source. And that’s something we’re very proud of.

But the topic of AI raises a fair question: where does such a disruptive technology fit into the future of high-end audio? If AI is already reshaping how we search, work, and communicate, it’s only a matter of time before it influences how we listen. As one of the resident millennials here at FutureAudiophile.com, I felt compelled to explore that question – and maybe start a larger conversation – before the Singularity hits and/or we are all inevitably plugged into the Matrix. And when it does happen, I certainly hope our robot overlords will forgive our student loans (asking for a friend).

Within the next year, AI will blend into music system operations almost invisibly. It’s already happening to some degree. Smarter room correction tools are eliminating the need for rudimentary test tones and cumbersome microphones attached to cords and cables. Digital Signal Processing (DSP) – once an acronym that made audiophiles recoil in horror – is being reimagined as an enhancement that preserves the character of our loudspeakers and improves clarity, bass definition, and imaging, all while adapting to each individual listening space and each listener’s personal preferences.

Digital signal processing has long been a tool for correcting acoustic imperfections, but AI is turning DSP into something far more intelligent and transparent. Instead of applying broad EQ curves or preset audio modes, today’s AI-enhanced amplifiers, integrated amps, and receivers are taking room correction to a whole new frontier. They can identify reflections, nulls, and resonances with a level of precision that traditional calibration cannot match, then apply adjustments that preserve the inherent character of the speaker instead of flattening or limiting it. As these systems learn from ongoing listening sessions, they can refine bass management, imaging stability, and tonal balance without the listener ever noticing the work being done behind the scenes. That said, this exciting new technology isn’t without its flaws and may not be for everyone. And while most mainstream receivers from brands like Marantz and Denon include basic room correction out of the box, unlocking the most powerful DSP tools, such as Audyssey MultEQ-X or Dirac Live, often requires extra licenses, paid apps, or tiered upgrade fees.
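To make the idea of "preserving" a speaker rather than flattening it a little more concrete, here is a deliberately simplified Python sketch of one common room-correction rule of thumb: cut room peaks freely, but only lightly boost dips, because a deep null is usually an acoustic cancellation that extra amplifier power can’t fill. The function name `correction_gains`, the flat target, and the 3 dB boost / 12 dB cut limits are hypothetical choices for illustration; this is not how Dirac Live, Audyssey, or any AI-driven product actually computes its filters.

```python
def correction_gains(measured_db, target_db=0.0, max_boost_db=3.0, max_cut_db=12.0):
    """Return per-frequency EQ gains (dB) that pull a measured response toward a target.

    Peaks (room resonances) are cut, up to max_cut_db.
    Dips (possible nulls) are boosted only up to max_boost_db, because boosting
    a cancellation mostly wastes amplifier headroom.
    """
    gains = {}
    for freq_hz, level_db in measured_db.items():
        error_db = target_db - level_db  # positive means the room is too quiet here
        # Clamp: allow generous cuts, but only modest boosts.
        gains[freq_hz] = max(-max_cut_db, min(max_boost_db, error_db))
    return gains


# Hypothetical measurement at the listening seat (dB relative to a flat target).
measured = {40: 6.0, 63: -14.0, 100: 2.0, 250: -1.5, 1000: 0.0}
gains = correction_gains(measured)
for freq, gain in sorted(gains.items()):
    print(f"{freq:>5} Hz: {gain:+.1f} dB")
```

With these made-up numbers, the 6 dB bass peak at 40 Hz gets a full 6 dB cut, while the 14 dB dip at 63 Hz receives only a 3 dB boost. Real systems work with far denser measurements, multiple mic positions, and phase as well as magnitude, which is where the smarter processing described above would come in.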

Looking ahead, AI-driven DSP is poised to become even more personalized and predictive. Instead of running a calibration once and hoping for the best, future processors will analyze listener position, content type, amplitude, and even individual hearing profiles continuously, adjusting the sound in real time. We’re already getting a glimpse of this future with bleeding-edge tech like Dirac ART, which uses your system’s speakers to actively counteract room issues, and Anthem’s ARC Genesis software, which learns more about a room’s acoustics with every revision. Imagine a system that knows when you’ve shifted a foot to the left and quietly corrects image drift, or recognizes that you like brighter dialogue for movies but a warmer presentation for vinyl. Suddenly, amplification starts to feel less like a fixed piece of hardware and more like a living system. The goal shouldn’t be to rewrite a speaker’s voice, but to let it perform exactly as the designer intended, no matter what room it ends up in. And if AI can deliver that with zero menu diving? Count me in.

Music discovery will undergo a significant overhaul. It’s already happening, if you haven’t noticed – just check your favorite streaming service’s latest software update. More than likely, a beta version of an AI assistant has been quietly integrated. And these AI-powered recommendation engines are no longer just sorting by genre, tempo, and the length and silkiness of a lead singer’s beard. They’re analyzing emotional, structural, and tonal qualities to deliver hyper-personalized suggestions. Ask an AI assistant to find you new music based on your preferences, and you might uncover artists and albums that would take months (or years) of browsing forums and arguing with fellow music nerds to stumble across. The technology is far from perfect and is at times laughably inaccurate, but the prospect of super-smart, AI-driven music discovery is certainly an interesting development. The real question, though, is this: should music discovery remain a fully self-guided journey, or is it acceptable to let almighty algorithms chart the course for us? That one’s personal, and to be honest, I’m still figuring out where I stand.
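Streaming services don’t publish exactly how their recommendation engines work, so what follows is only a toy Python sketch of one well-known approach, content-based similarity: describe each track as a vector of scores, average the tracks you like into a "taste profile," and suggest the unheard tracks whose vectors point in the most similar direction (cosine similarity). The feature names, track titles, and scores are all made up for illustration and don’t reflect any real service’s data.

```python
import math

# Hypothetical 0-1 feature scores: (energy, warmth, tempo, acousticness)
CATALOG = {
    "Track A": (0.2, 0.9, 0.3, 0.8),
    "Track B": (0.9, 0.2, 0.8, 0.1),
    "Track C": (0.3, 0.8, 0.4, 0.9),
    "Track D": (0.6, 0.5, 0.6, 0.4),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recommend(liked, catalog, top_n=2):
    """Rank tracks not yet liked by similarity to the average of the liked tracks."""
    # Simple taste profile: the mean of each feature across the liked tracks.
    profile = [sum(vals) / len(liked) for vals in zip(*(catalog[t] for t in liked))]
    candidates = [t for t in catalog if t not in liked]
    ranked = sorted(candidates, key=lambda t: cosine_similarity(profile, catalog[t]), reverse=True)
    return ranked[:top_n]


print(recommend(["Track A"], CATALOG))  # → ['Track C', 'Track D']
```

A mellow, acoustic "Track A" pulls in the similarly warm "Track C" first, while the high-energy "Track B" lands last. The "laughably inaccurate" moments mentioned above are easy to picture here: the suggestions can only be as good as the features someone chose to measure.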

AI For the Audiophile Over the Next Five Years …

In five years, I predict AI will fundamentally reshape how we shop for, research, and set up our audiophile systems. Manufacturers might use predictive modeling to generate “digital replicas” of listening spaces, letting buyers hear a simulation of how a product would sound in their own room before they make a purchase. Just plug in your dimensions, upload a photo of your space, room treatments and all, close your eyes, and voilà! You’re demoing your next piece of gear as if you were sitting on your favorite couch in your personal musical sweet spot.

Product recommendations and reviews (gulp) will likely also become hyper-personalized, guided by psychoacoustic profiles unique to each listener, compliments of cross-app data collection. Data tracking across all aspects of life will form a digital profile of your tastes in order to send targeted products, music, and software your way. Sounds invasive, but it’s already happening in basically every other aspect of our lives, so it’s only a matter of time before it finds its way into the audiophile part of your brain.

And finally, music production itself is poised for a major shift. Creators are already using AI-powered tools to mix music quickly and with incredible precision and ease. Meanwhile, AI-generated music is becoming increasingly difficult to distinguish from mainstream releases – a little unsettling, sure, but also a clear sign of how far this technology has already infiltrated the music world. Even archival restoration is being reimagined through AI-driven remastering, opening the door to sonic improvements we once thought impossible, while at the same time forcing us to wrestle with important questions about authorship, artistic intent, and where the “real” music ends and the machine begins. The copyright issues are a nightmare, and these laws will likely need to be reengineered for the modern era, provided our politicians recognize the issues facing their constituents.

AI For the Audiophile Over the Next 10 Years …

Ten years from now, AI could redefine high-end audio entirely. Real-time personalized mastering may allow users to shape tonal balance, dynamics, and spatial cues to their liking without compromising source integrity. Spatial audio advancements may make physical speaker count and size far less relevant, as “venue simulation” becomes commonplace – whether that’s the mezzanine of Carnegie Hall or the front row of a concert that only ever existed in the listener’s mind.

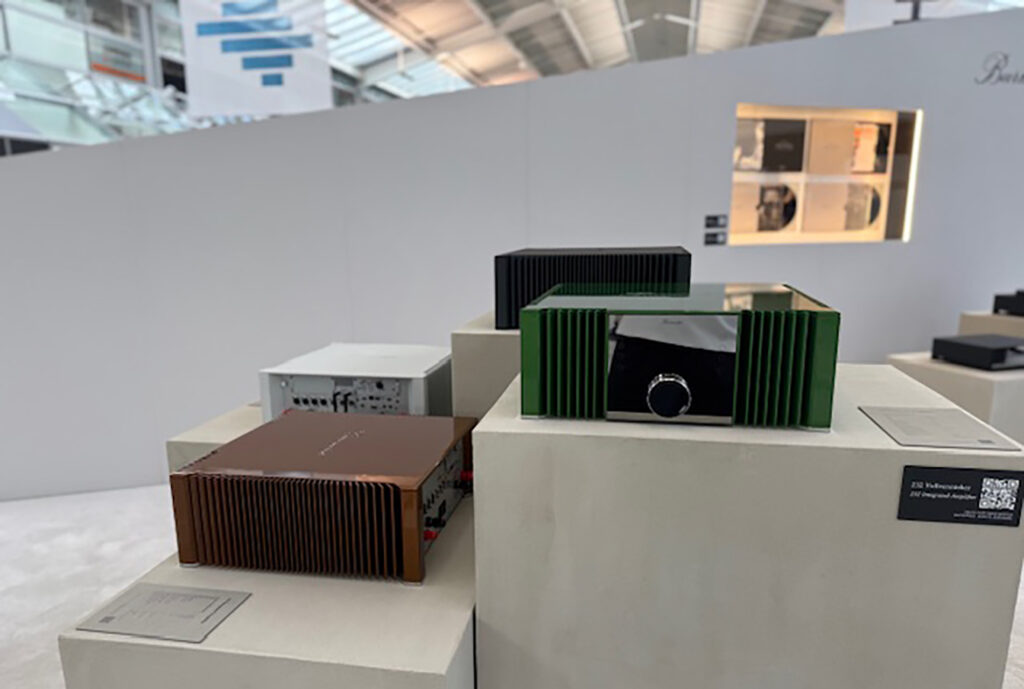

Virtualized audiophile components could emerge as well, with amplifiers, DACs, and even speaker characteristics modeled so accurately that expensive hardware becomes optional, though there will always be a niche market for bells and whistles and over-the-top designs. Music itself may become fluid, alterable, and partially user-controlled or even generated – an exciting and also worrying prospect that will push the boundaries of creative integrity. Think “Choose Your Own Adventure,” but for every song in your catalogue. Whether that’s a good thing is entirely up to you, the listener. Not sure I’m ready to go there quite yet.

Hearing aid technology, something near and dear to me, is poised for one of the biggest AI-driven revolutions, which will profoundly affect the predictably aging audiophile demographic. Over the next decade, hearing aids (products I deal with on a daily basis in my day job) will evolve from somewhat simple amplification devices into intelligent listening companions capable of understanding the wearer’s intent. AI-powered signal processing will separate speech from noise more naturally than ever, adapting in real time to different acoustic environments, such as restaurants, concerts, and even busy city streets, without constant manual adjustments. Personalized sound profiles will update continually based on biometrics and neural feedback, creating a level of clarity and listening comfort that feels almost telepathic. New developments in tinnitus reduction are also on the horizon, something I, as a tinnitus sufferer, await with deep excitement. We will also likely see seamless smart integration with headphones and home audio systems, blurring the line between assistive tech and high-end personal audio. For millions with hearing loss – including the many audiophiles who simply don’t talk about it – AI could restore not just audibility, but the emotional nuance and musical detail that made listening joyful in the first place.

Despite all this rapid change, AI won’t strip away what makes us audiophiles. Our passion has never been rooted in microchips, machines, or algorithms, but rather in emotion and connection to music. It’s the joy of hearing the subtle details in a track we’ve listened to a hundred times. It’s the satisfaction of tuning your dream system to perfection, only to take it all apart and start all over again just for the fun of it. It’s the stories behind our gear and our favorite artists, and the commitment to listening as an experience worth prioritizing, versus just humming along to background noise.

AI may widen the pathway into high-performance audio and remove long-standing barriers that made the hobby feel intimidating or exclusive. Some will resist, just like many did with Class-D amps, CDs, MP3s, and music streaming. Others will embrace every new step forward, and most will settle somewhere in the middle.

If this hobby is a lifelong pursuit, AI is enhancing the roadmap and potentially adding exponentially more routes to explore. Systems will be smarter. Music discovery will be richer and more complex. Personalization will reach new heights we couldn’t imagine even a decade ago. And throughout all of it, the goal remains unchanged: to feel music more deeply and form a connection with it – something that is increasingly fleeting these days, across all forms of media and art.

For as long as the hobby has existed, being an audiophile has been about two core pursuits: chasing better sound and debating that sound with anyone willing to listen. Now, AI is emerging as the most significant disruptor since the shift from analog to digital. It’s already altering the way we discover music, evaluate the performance of our gear, and optimize our systems. The question is no longer if AI will influence our hobby, but how fast the transformation will happen. AI may not have a soul – not yet, anyway – but it might just help audiophiles better connect with theirs. If that’s the case, I’m willing to see what it can do.

What are your thoughts on AI and how it might transform the audiophile hobby? Where do you think our hobby will be in 10+ years, with or without AI? Do you think AI is overblown or a bigger deal than the Internet was in the early 1990s? Let us know and we will post your comments ASAP.

First and foremost, audiophiles are tinkerers. We like building a system, and we like hearing how a new product changes the character of our setup, for better or worse. That said, I don’t think audiophiles would be willing to cede the power of change to an algorithm. We like feeling that we can make the adjustments we want to hear, rather than just sitting back and allowing a chunk of code to make adjustments for us. Don’t get me wrong, I use AI every day. But I use it as a tool, not an end-to-end solution. Tinkerers are gonna tink. I don’t think that will ever go away.

I believe there is a Taylor Swift lyric in there somewhere … 🙂

I predict that in 10 years or less, China will dominate both the AI and mid-fi/hi-fi mfgr industries, among other things, and that ain’t steak sauce.

I predict in 10 years or less, we will ban Chinese products in the USA. Hopefully.

Like Trump ties?

Eric! Dude! You review and know a lot about hearing aids?! And you’re an audiophile?! Where have you been for the last 4 years that I’ve had a hearing aid? I’m a 64-year-old lifelong audiophile who experienced huge disappointment when I started losing my hearing about 5 years ago and my formerly awesome audio system started sounding like crap. In fact, I spent thousands on new equipment before I realized it was my hearing that was the problem. I researched the heck out of hearing aids and audiologists to find one who not only knew about hearing aids, but also about music and, most importantly, about the music reproduction that is important to us audiophiles. I ended up finding several audiologists who were into music, worked in the music industry, and fit some very famous musicians with IEMs, but unfortunately none who were also audiophiles, which is a little bit different from being a musician. I ended up with Widex Moment 440s, which help a lot, but I’m still severely disappointed with listening to music. Do you have any suggestions for audiologists in Southern California, or even the Western United States, who are also audiophiles?

Synergistic Research just introduced a room tuning suite of products, whereby you speak to your audio system directly and tell it how you want your sound to change. It learns your taste over time and can adjust automatically for each song and mastering type. It’s here.

Synergistic Research is, sadly, a brand whose products we DO NOT RECOMMEND for any readers at FutureAudiophile.com.

Mr. Denny’s antics (for example, making fun of people on YouTube for catching COVID) and voodoo products are just not something that I would ever invest in. Readers can do what they want, but we just try to steer clear of them.

Beyond signal processing, forward-thinking designers are quietly advancing power amplifier and power supply technology to treat the audio system as a coherent whole rather than a collection of isolated parts. Traditional, single-function power supplies and amplifiers are giving way to intelligent, system-wide control and monitoring that optimizes how power is delivered under real musical conditions. The result is greater efficiency and power density but, more importantly, lower noise, improved dynamic response, and increased transparency, translating directly into more lifelike sound and greater long-term value for the listener.

A lot of this article focuses on things like smarter playlists, better recommendations, metadata cleanup, and library organization. All useful features, but none of that really touches the audiophile part of the hobby.

From where I sit, AI isn’t going to replace:

- trained listening
- a clean, simple source chain
- understanding playback paths
- evaluating mastering differences
- hearing how gear behaves in the real world

AI can help with data.

It can’t replace experience.

Where AI will matter is behind the scenes:

- remastering and restoration
- stem separation
- noise reduction
- dynamic range recovery
- upmixing and spatial formats
- archival work

Those things actually affect how music sounds, not just how it’s delivered.

But even then, AI won’t change the fundamentals of honest listening.

It won’t tell you what sounds natural.

It won’t decide what’s accurate.

It won’t replace a simple, transparent chain and a pair of trained ears.

So yes — AI will touch the hobby.

But the heart of the audiophile experience stays the same:

sit down, listen intentionally, and trust what you hear.